7 Biggest Privacy Concerns Around Facial Recognition Technology

Facial recognition technology is rapidly becoming a ubiquitous part of modern life, powering everything from smartphone security features to advanced surveillance systems. While its applications offer convenience and enhanced security, the widespread use of facial recognition also raises significant privacy concerns. As this technology continues to evolve and integrate into various aspects of society, it is crucial to examine the potential risks it poses to personal privacy.

This blog post will explore the seven biggest privacy concerns surrounding facial recognition technology. These concerns highlight the need for careful consideration and robust safeguards to ensure that the benefits of facial recognition do not come at the cost of individual rights and freedoms. From issues of consent and transparency to the dangers of data breaches and commercial exploitation, understanding these challenges is essential in navigating the complex landscape of facial recognition in today's digital age.

Improper Data Storage

At the core of facial recognition technology is the collection and storage of biometric data—unique identifiers such as facial features that distinguish one individual from another. Unlike other forms of data, biometric information is inherently personal and permanent. If it is compromised, the consequences are far-reaching and cannot be easily mitigated. For example, while a password can be changed after a breach, a person's facial structure cannot.

Improper data storage practices can expose this sensitive information to unauthorized access, either through accidental leaks or deliberate cyberattacks. Without robust security measures in place, facial recognition data is vulnerable to being hacked, stolen, or otherwise misused. This can lead to identity theft, fraud, and even surveillance by malicious actors, undermining trust in the technology and the institutions that use it.

Furthermore, improper storage can also involve keeping data for longer than necessary or storing it in a manner that makes it difficult to delete. This raises concerns about data retention policies and the right to be forgotten. If individuals' biometric data is stored indefinitely, it increases the risk of future breaches and misuse, long after the original purpose for collecting the data has passed.
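A retention policy of the kind described above can be sketched in a few lines. This is a minimal illustration, not a production design: the `BiometricRecord` shape, the field names, and the two helper functions are hypothetical, and a real system would also have to purge backups, logs, and any copies held by third parties.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class BiometricRecord:
    # Hypothetical record shape, assumed for illustration only.
    subject_id: str
    template: bytes          # encoded face template, not a raw image
    collected_at: datetime   # timezone-aware collection time
    purpose: str             # why the data was collected


def purge_expired(records, max_age, now=None):
    """Return only the records still inside the retention window."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [r for r in records if now - r.collected_at <= max_age]


def erase_subject(records, subject_id):
    """Drop every record for one person, e.g. after a deletion request."""
    return [r for r in records if r.subject_id != subject_id]
```

Running `purge_expired` on a schedule, with `max_age` tied to the stated purpose of collection, is one way to keep data from lingering after that purpose has passed.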

Erosion of Anonymity

One of the most significant concerns is the erosion of anonymity in public spaces. The ability to move about freely, without the fear of being constantly monitored or identified, is a cornerstone of personal privacy. However, with the widespread deployment of facial recognition systems, this fundamental aspect of privacy is under threat.

Traditionally, people have enjoyed a certain level of anonymity when in public. Whether walking down a busy street, attending a protest, or simply shopping at a mall, individuals could expect a degree of privacy simply by blending into the crowd. However, facial recognition technology fundamentally alters this dynamic.

Cameras equipped with facial recognition capabilities can scan and identify individuals in real time, often without their knowledge or consent. This creates a situation where anonymity in public spaces becomes nearly impossible. Every movement, every interaction can be recorded and linked back to a person’s identity, stripping away the protective layer of privacy that anonymity once provided.

This loss of anonymity poses particular challenges for activities that depend on the ability to remain unidentified. For example, participating in protests or demonstrations, which are vital components of free expression and democratic engagement, becomes riskier when facial recognition is in use. Individuals may feel deterred from exercising their rights due to fear of being tracked or targeted later.

Lack of Consent and Transparency

One of the most troubling aspects of facial recognition technology is its often-invisible presence. In many instances, facial recognition systems are installed and used without the public's awareness. Whether it's cameras in public parks, airports, or retail stores, individuals are frequently scanned and analyzed without knowing that their facial data is being captured and processed.

This lack of transparency is problematic for several reasons. First, it deprives people of the opportunity to make informed decisions about their privacy. When individuals are unaware that their faces are being scanned, they cannot opt out or take steps to protect their personal information. This covert use of technology can erode trust between the public and the institutions that deploy these systems, as people may feel that their privacy is being violated without their consent.

Moreover, the use of facial recognition without public knowledge raises concerns about accountability. Without transparency, it becomes difficult to determine who is responsible for managing and protecting the collected data, how long it is stored, and for what purposes it is used. This lack of oversight can lead to misuse or abuse of the technology, with potentially severe consequences for individuals' privacy and civil liberties.

Obtaining informed consent poses its own challenges. Even when efforts are made to inform the public about the use of facial recognition technology, the information provided is often insufficient or too complex to allow for an informed decision. For instance, notices about facial recognition use might be buried in lengthy terms and conditions or posted in obscure locations, making it unlikely that people will read or fully understand them.

The concept of informed consent is further complicated by the nature of the technology itself. Unlike other forms of data collection, where individuals might have more control over what information they share, facial recognition captures biometric data passively. Simply being in a space where the technology is in use can result in the collection of personal data, often without any direct interaction or explicit consent from the individual.

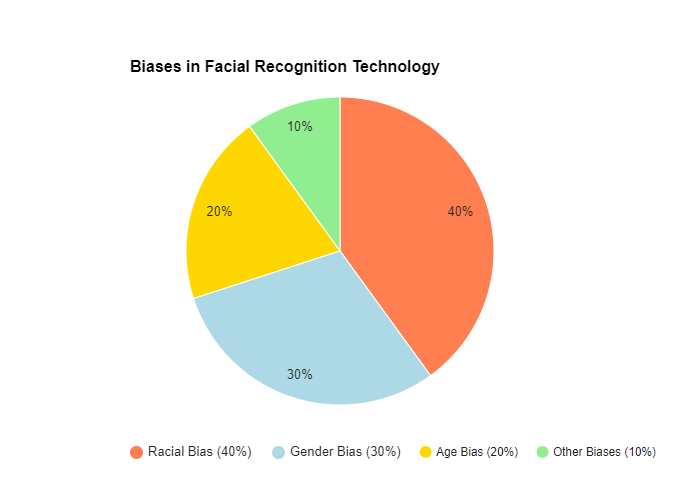

Discrimination and Bias

One of the most documented and alarming biases in facial recognition technology is racial bias. Studies have consistently shown that facial recognition systems tend to have higher error rates when identifying individuals with darker skin tones. These errors manifest as both false positives—incorrectly identifying someone as another person—and false negatives—failing to recognize someone entirely.

This racial bias stems from the datasets used to train these algorithms. Many facial recognition systems are trained on datasets that lack sufficient diversity, often containing a higher proportion of images of lighter-skinned individuals. As a result, the algorithms become more accurate at recognizing lighter-skinned faces while struggling to accurately identify people of color. This discrepancy can lead to serious consequences, particularly in law enforcement, where misidentification could result in wrongful arrests or detainment.

Gender bias is another significant issue in facial recognition technology. Research has shown that these systems often have higher error rates when identifying women, particularly women of color. Similar to racial bias, this issue is largely due to the training datasets, which may not include a balanced representation of different genders. Additionally, facial recognition algorithms might struggle with the wide range of facial features and expressions that can vary across genders. This gender bias can have far-reaching implications. In surveillance and security settings, for example, women may be misidentified more frequently than men, leading to unequal treatment or even exclusion from certain services.

Age bias in facial recognition technology is another concern, with algorithms often performing less accurately on older individuals. This issue arises because many facial recognition systems are trained primarily on images of younger adults, leading to a lack of precision when identifying older people. Age-related changes in facial features, such as wrinkles or reduced skin elasticity, can make it more challenging for these systems to correctly identify older individuals.

Misidentification and Inaccuracy

Facial recognition technology, despite its advancements, is far from perfect. One of the most significant challenges it faces is the issue of misidentification and inaccuracy. These errors, which manifest as false positives and false negatives, can have serious consequences for individuals and raise broader concerns about the reliability and fairness of the technology.

A false positive occurs when facial recognition technology incorrectly identifies a person as someone else. This type of error can lead to severe consequences, particularly in high-stakes situations like law enforcement. For instance, if a facial recognition system mistakenly identifies an innocent person as a suspect in a criminal investigation, that individual may be subjected to unwarranted scrutiny, questioning, or even arrest. The psychological and reputational damage caused by such errors can be profound, impacting a person’s life long after the mistake is corrected.

False positives are especially problematic because they undermine trust in the technology. When people are wrongly identified, it not only damages their personal lives but also erodes public confidence in the systems that rely on facial recognition. This lack of trust can hinder the adoption of potentially beneficial applications of the technology, such as in security or personalized services, where accuracy is crucial.

On the other hand, false negatives occur when the technology fails to recognize or match a face that should be identified. While this might seem less harmful than a false positive, it can still have significant negative impacts. For instance, in security settings, a false negative could mean that a known threat is not detected, potentially leading to serious security breaches. In other scenarios, such as identifying missing persons or tracking known criminals, false negatives can impede important investigative efforts, delaying justice or rescue operations.
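The two error types can be made concrete by counting them over a set of labeled face comparisons. The sketch below assumes a system that outputs a similarity score per comparison and declares a match at or above a threshold. The function name, the scores, and the thresholds are all made up for illustration and do not describe any particular product.

```python
def error_rates(scores, same_person, threshold):
    """Compute false positive and false negative rates at one threshold.

    scores       -- similarity score per face comparison (higher = more alike)
    same_person  -- True if the pair really shows the same person
    threshold    -- scores at or above this value are declared a match
    """
    fp = fn = positives = negatives = 0
    for score, is_same in zip(scores, same_person):
        predicted_match = score >= threshold
        if is_same:
            positives += 1
            if not predicted_match:
                fn += 1  # missed a genuine match
        else:
            negatives += 1
            if predicted_match:
                fp += 1  # matched two different people
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr


# Invented scores, purely for illustration.
scores = [0.91, 0.42, 0.77, 0.65, 0.30, 0.88]
same_person = [True, False, True, False, False, True]

for threshold in (0.5, 0.7, 0.9):
    fpr, fnr = error_rates(scores, same_person, threshold)
    print(f"threshold={threshold}: FPR={fpr:.2f}, FNR={fnr:.2f}")
```

Raising the threshold generally lowers the false positive rate at the cost of more false negatives, which is why the choice of threshold matters so much in high-stakes settings.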

Commercial Exploitation

Facial recognition technology has become a powerful tool, not just for security and law enforcement but increasingly for commercial purposes. As businesses realize the potential of this technology, concerns are growing about the ways in which facial recognition data is being used, particularly in marketing and advertising. The commercialization of this data raises significant privacy issues, especially regarding the monetization of personal information.

In the world of marketing and advertising, data is king. Companies are always seeking new ways to better understand their customers, target ads more effectively, and personalize the consumer experience. Facial recognition technology offers a new frontier in this pursuit, enabling businesses to collect and analyze biometric data to gain insights into consumer behavior.

For instance, facial recognition cameras in stores can analyze customers' facial expressions as they interact with products, providing real-time feedback on their reactions. This data can then be used to tailor advertisements and product recommendations specifically to each individual, creating a highly personalized shopping experience. Similarly, digital billboards equipped with facial recognition can change their content based on the demographics or even the mood of passersby, making advertisements more relevant and engaging.

The monetization of such personal data raises several concerns. First, it can lead to a loss of privacy as individuals' biometric information is bought and sold without their knowledge or consent. This data can be used to create detailed profiles that go far beyond traditional demographic information, potentially including insights into a person's emotional state, health, or even lifestyle choices. The prospect of companies having this level of insight into individuals' lives is deeply unsettling for many.

Second, the commodification of facial recognition data can exacerbate inequalities. Companies with access to large amounts of biometric data may have a competitive advantage, allowing them to target consumers more effectively and potentially manipulate purchasing behavior. Meanwhile, consumers, especially those from vulnerable groups, may be disproportionately targeted or exploited based on the data collected about them.

Finally, the lack of regulation around the commercial use of facial recognition data is a major concern. As companies push the boundaries of what is possible with this technology, there is a growing need for clear guidelines on how this data can be used, shared, and sold. Without such regulations, there is a risk that the commercialization of facial recognition technology will continue to outpace the protections put in place to safeguard consumer privacy.

Facial Recognition Might Become Normalized

The normalization of facial recognition technology raises the risk of widespread surveillance becoming entrenched in society. As facial recognition systems become more common, there is a danger that they could be implemented in an increasing number of areas, from schools and workplaces to public transportation and social events. What begins as a security measure in high-risk areas could quickly expand into a tool for monitoring everyday activities, with little oversight or accountability.

The potential for abuse in such a scenario is significant. Governments and corporations could use facial recognition technology to track individuals' movements, monitor their associations, and even predict their behavior based on past patterns. This level of surveillance could be used to stifle dissent, target specific groups, or manipulate public opinion. Without strong legal protections and clear limits on how facial recognition can be used, the normalization of this technology could lead to a society where privacy is virtually nonexistent.

To prevent the normalization of facial recognition technology from eroding privacy, it is crucial to remain vigilant and advocate for strong regulations. Policymakers need to establish clear guidelines that govern the use of facial recognition, ensuring that it is deployed only when absolutely necessary and in ways that respect individual rights. Public awareness and education are also key to resisting normalization—by understanding the implications of facial recognition technology, individuals can make informed choices about its use and demand greater transparency from those who deploy it.

What solutions are available?

Stronger Regulations and Legal Frameworks

One of the most effective ways to address privacy concerns is through the establishment of robust legal frameworks. Governments can implement regulations that specifically govern the use of facial recognition technology, ensuring that it is deployed transparently and ethically. These laws can mandate the necessity of informed consent, limit the duration of data storage, and impose strict penalties for misuse or data breaches. By setting clear standards, these regulations can help protect individuals' privacy and provide accountability for organizations that use the technology.

Enhanced Data Security Measures

To prevent unauthorized access and data breaches, organizations must adopt rigorous data security measures. This includes encryption of facial recognition data both in transit and at rest, regular security audits, and the use of advanced authentication methods to control access to sensitive information. Implementing these security protocols can significantly reduce the risk of data being compromised, ensuring that biometric information is kept safe from cyber threats.

Privacy-Enhancing Technologies

The development and deployment of privacy-enhancing technologies can also play a crucial role in addressing privacy concerns. For instance, federated learning trains models on users' own devices so that raw images need not be sent to a central server, while differential privacy adds calibrated noise to released results so that they reveal little about any one individual. Approaches like these can reduce how much raw biometric information an organization has to access or store while still enabling the technology to function effectively.
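As a rough illustration of differential privacy, the sketch below applies the Laplace mechanism to a simple count, such as how many distinct people a system matched in a given hour. It protects a released statistic rather than the recognition step itself. The function names and the choice of `epsilon` are assumptions for the example, and real deployments need careful privacy accounting across repeated releases.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(true_count, epsilon, rng=None):
    """Release a count with epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Smaller epsilon means more noise and stronger privacy.
print(private_count(128, epsilon=0.5))
```

The published figure stays useful in aggregate, but no single person's presence or absence can be confidently inferred from it.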

Transparency and Public Awareness

Transparency is key to building trust and ensuring the ethical use of facial recognition technology. Organizations should be open about where and how the technology is used, clearly communicating its purpose to the public. This can be achieved through public disclosures, visible signage in areas where facial recognition is in operation, and easily accessible privacy policies. Additionally, raising public awareness about how the technology works and its potential risks can empower individuals to make informed decisions about their participation.

Bias Mitigation Strategies

Addressing bias in facial recognition systems is essential to ensuring fair and equitable outcomes. Developers and organizations can employ bias mitigation strategies, such as training algorithms on diverse datasets that represent various demographics. Regularly auditing and testing these systems for accuracy across different population groups can help reduce the likelihood of misidentification and ensure that the technology is fair and reliable for everyone.
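An audit across population groups can start with something as simple as comparing error rates per group on a labeled test set. The sketch below is one hedged way to do that. The group labels, the helper names, and the sample results are placeholders, and a real audit would use far larger samples and separate false positives from false negatives.

```python
from collections import defaultdict


def error_rate_by_group(results):
    """Map each group label to its share of incorrect identifications.

    results -- iterable of (group, was_correct) pairs from a labeled test set
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, was_correct in results:
        totals[group] += 1
        if not was_correct:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_disparity(rates):
    """Gap between the worst and best per-group error rates."""
    return max(rates.values()) - min(rates.values())


# Placeholder results, invented for illustration.
results = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", False), ("group_b", True),
]
rates = error_rate_by_group(results)
print(rates, max_disparity(rates))
```

Tracking the disparity over time gives developers a concrete number to drive down, rather than relying on a single overall accuracy figure that can hide poor performance for some groups.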

Limited and Purpose-Driven Use

Another approach to mitigating privacy concerns is to limit the use of facial recognition technology to specific, well-defined purposes. Organizations should avoid the temptation to deploy facial recognition broadly or for unrelated objectives. By using the technology only where it is genuinely necessary and beneficial, and where less invasive alternatives are not available, the risks to privacy can be minimized.

Conclusion

Facial recognition technology offers remarkable capabilities, from enhancing security to streamlining daily tasks. However, as its use becomes more widespread, it also raises significant privacy concerns that cannot be ignored. From the risk of data breaches and improper storage to issues of misidentification, commercial exploitation, and the erosion of anonymity, the potential for harm is substantial. The lack of transparency and challenges in obtaining informed consent further complicate the ethical landscape, leaving individuals vulnerable to the misuse of their most personal information.

These seven privacy concerns highlight the urgent need for stronger regulations, better security practices, and more ethical approaches to the deployment of facial recognition technology. As society navigates the balance between technological advancement and individual rights, it is crucial to prioritize privacy and ensure that the benefits of facial recognition do not come at the cost of personal freedom and security.

In a world increasingly shaped by digital innovation, safeguarding privacy is more important than ever. By addressing these concerns head-on, we can work towards a future where facial recognition technology is used responsibly, with respect for the rights and dignity of all individuals.